The Health Information Technology for Economic and Clinical Health (HITECH) Act was passed by Congress in February 2009 as part of the American Recovery and Reinvestment Act (ARRA), and an Interim Final Rule has since been issued to implement it. Under this act, eligible providers will receive financial incentives if they demonstrate "meaningful use" of "certified" Electronic Health Record (EHR) technologies.

Health care vendors therefore have a strong incentive to offer solutions that meet the criteria described in the law. More precisely, the associated regulation from the Department of Health and Human Services describes the initial set of standards, implementation specifications, and certification criteria for EHR technology.

As a Software Architect, I was curious to see whether Service Oriented Architecture (SOA) or Web Services in general were mentioned in these documents.

The definition of an EHR Module includes an open list of services such as electronic health information exchange, clinical decision support, public health and health authorities information queries, quality measure reporting etc.

In the transport standards section, both SOAP and RESTful Web services protocols are described. However, Service Oriented Architecture (SOA) is never explicitly described or cited, and there is no reference to how these services might be discovered and orchestrated in a "meaningful way". I assume the reason is that the lawmakers and regulators wanted to stay as neutral as possible about the underlying technologies for an EHR and its components.
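To make "discovered and orchestrated" a bit more concrete, here is a minimal sketch in Python of what the regulation leaves open. Everything in it is my own assumption for illustration: the `ServiceRegistry` class, the `orchestrate` function, the capability names and the two stand-in modules are hypothetical and do not come from the regulation or from any real product. A real SOA would put these services behind SOAP or RESTful endpoints and use a proper registry rather than an in-memory dictionary.

```python
from typing import Callable, Dict, List

# A service here is just a function from a record (dict) to a record (dict).
Service = Callable[[dict], dict]


class ServiceRegistry:
    """Hypothetical in-memory registry mapping a capability name to a service."""

    def __init__(self) -> None:
        self._services: Dict[str, Service] = {}

    def register(self, capability: str, service: Service) -> None:
        self._services[capability] = service

    def discover(self, capability: str) -> Service:
        # Discovery: look the service up by what it does, not by where it lives.
        if capability not in self._services:
            raise LookupError(f"no service registered for {capability!r}")
        return self._services[capability]


def orchestrate(registry: ServiceRegistry, steps: List[str], request: dict) -> dict:
    """Orchestration: call the discovered services in order, passing the record along."""
    record = dict(request)
    for capability in steps:
        record = registry.discover(capability)(record)
    return record


# Illustrative stand-ins for two EHR Modules (not real implementations).
def decision_support(record: dict) -> dict:
    alerts = ["allergy-check"] if record.get("allergies") else []
    return {**record, "alerts": alerts}


def quality_report(record: dict) -> dict:
    return {**record, "reported": True}


registry = ServiceRegistry()
registry.register("clinical-decision-support", decision_support)
registry.register("quality-measure-reporting", quality_report)

result = orchestrate(
    registry,
    ["clinical-decision-support", "quality-measure-reporting"],
    {"patient_id": "demo-1", "allergies": ["penicillin"]},
)
```

The point of the sketch is only that the caller names capabilities rather than concrete systems, so a module could be swapped for another vendor's without changing the workflow.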

The technical side of "meaningful use" is specified more precisely in terms of interoperability, functionality, utility, confidentiality and integrity of the data, and the security of the health information system in general.

These characteristics are not specific to SOA; they apply to any well-designed health care software solution.

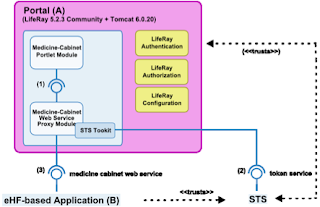

Still, the following passage seems to describe a solution that could best be implemented using a Service Oriented Architecture: "As another example, a subscription to an application service provider (ASP) for electronic prescribing could be an EHR Module", where software is offered as a service (SaaS). This looks more like the description of an emerging SOA than a full grid-enabled SOA.

It will be up to solution providers to come up with relevant products and tools that maximize the return on investment (ROI) for the taxpayers' money and for the professionals and organizations eligible for ARRA/HITECH.

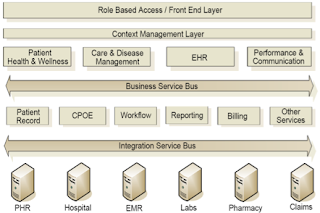

SOA will definitely be part of the mix, since it gives the ability to create, offer and maintain large numbers of complex EHR software solutions (SaaS) that have a high level of modularization and interoperability.

Further developments toward a complete SOA stack, such as offering a Platform as a Service (PaaS) and even the underlying Infrastructure as a Service (IaaS) in the cloud, will face more resistance in a domain known for its many legacy systems and its concerns about privacy and security.

The Object Management Group (OMG) is organizing a conference this summer on the topic of "SOA in Healthcare: Improving Health through Technology: The role of SOA on the path to meaningful use". It will be interesting to see what healthcare providers, payers, public health organizations and solution providers from both the public and private sector will have to say on this topic.
